Now, this post is going to require some nuance on a currently rather touchy subject. But let’s start by saying I’m not gonna tell you how great ChatGPT, Copilot, and all those other big AI systems you see everywhere are. If you’re looking for a reason why it’s actually not so bad, this is not it. But at the same time, if you just want to read how bad and evil AI is, this isn’t it either. Because the fact is, I’m quite fond of it, and I find it sad that it’s getting such a bad reputation because of the bad usage promoted by the big AI companies and the rest of big tech.

Also, whoops, this one got lengthy again. I swear I tried to keep it simple, but I’m not a great explainer (it’s why I will likely never become a teacher). In fact, I wanna add a disclaimer: because I tried to keep it simple, I’ve simplified a lot of stuff. If you know how AI works, you will likely say “well, technically that works differently” a few times. But if you know how AI works, you likely don’t need to read much of this either, so I’m aiming at a slightly different audience. Perhaps you know how it works yet are still curious about my takes/opinions. In that case, read along, but keep that in mind 😉.

I got interested in AI when I was 12, after seeing a video of the HRP-4C back when YouTube was still fun and gave you the most random shit ever. I’m nearing 30 now, so it’s been some time. But it sparked my interest and was part of why I got more into software engineering. I thought human-like AI and HRI (Human Robot Interaction) in particular were insanely cool, and I wanted to make AI systems like that. I still think it’s cool, but somewhere in my twenties, when I had nearly finished university, I figured that at its core it hadn’t changed much since the ’70s and got bored with it. That’s when I switched my efforts to the field I was already hobbying in, privacy and security, where, funnily enough, I found a few more people who’d had the same experience. Either way, despite being bored with it as something to spend 40 hours a week on, I’m still quite interested in it as a topic.

And once again: reactions can go on this Mastodon post (or just @ me there in general).

What is AI?

Anyway, let’s start at the start. What is AI? Well, technically anything perceived as smart. In that sense, AI doesn’t have a set definition. Once, a mouse pointer switching from a click icon to an input icon was AI. Now, it’s the most normal shit ever. It’s also why I find terms like “AGI” dumb. We already had ideas of what we now call “AGI” long before that term existed, and we just called it AI. It just feels like a marketing buzzword. Since AI means nothing specific, I’d much rather talk about the type of technology used. Currently, the most common ones are ANNs (Artificial Neural Networks), of which LLMs (Large Language Models) and most other AI in use today are subtypes. Still, when talking about all the kinds of technology we consider “AI”, or when we want Average Joe to understand what we mean, the term can be handy, so let’s stick with it for now. Also, while most people who stop to read this probably already know it, I find it important to mention that this technology is nothing new. It has existed since at least the late ’60s/early ’70s. Indeed, OpenAI/ChatGPT did not invent “AI”.

The issues with current AI

I’ve been thinking for a while about whether I should start with the good or the bad, but I’m gonna start with the latter. Because, as I said, I’m not a fan of (most) modern usage of AI. I’m sure you’ve heard people complain about it online already, but I’m still going to list the issues:

- It’s security & privacy hell. Sensitive data gets uploaded to the servers running the ANN models through the prompts sent to them, and ends up in training data, where it can be repeated to who knows who (because an ANN cannot be controlled with full certainty, no matter how many safeguards you build). You could write a whole blog post about that alone, maybe one day. But we’re all being warned left and right about this one, even if we still ignore it half the time, and even the big tech companies can’t deny it about their own AI products, so there is little to discuss on the “is it good or bad” part. We all kinda agree it’s bad already.

- It’s being used everywhere simply and only because “it’s cool”. True as that might be, and as much as I love to follow the rule of cool, it cannot and should not be applied everywhere in real life. Running these models requires a lot of energy, and often similar or even better results can be achieved with less “cool” technologies. And while I’m not someone who cares much about the environment, even I can’t get behind doing that just so you can advertise that you use some hyped buzzword technique.

- There is a lack of transparency. AI systems are used in secret, sometimes faking entire humans without telling you. Think of that “totally real person” the webshop’s chatbot hands you over to, who turns out to be just a better chatbot with a human’s picture (looking at you, Amazon), or artists presented as human beings who are fully AI-generated.

- People will form unhealthy relationships with these systems. This one isn’t limited to current AI; anything that gets smarter and more personal will have it. And despite my love for human-like AI and HRI, or maybe because of it, it’s actually my biggest worry about AI in general, even when it’s done right (although, with the way it’s done now, privacy & security ties with it). It’s something that definitely requires a delicate approach, and it isn’t easy to solve if we still want fun, useful and healthy relationships with these systems. And to be clear, relationships aren’t only interpersonal ones in the sense of “AI is my friend/partner/whatever”, but cover every interaction we have with it.

- It’s used in all the wrong moments, for all the wrong things. This is one I wanna get into more, so it’s getting its own chapter.

You might notice I didn’t mention all the copyrighted material in training data, and that’s because I have more mixed feelings about that. On one hand I get the issue, and I cannot deny that using copyrighted material for training breaks copyright law. But at the same time, knowing how an ANN learns, I find it hard to hate entirely. It is based on how we as humans learn. You often hear “but we have imagination and an ANN doesn’t”, but I expect the truth to be much less clear-cut. ANNs don’t copy-paste from their training data, but deduce patterns from it. We humans do that too, but our “training data” is everything we see in our lives. Every concept we understand, we understand because we’ve connected it to something. And even most things our imagination creates are these concepts put together. A very simple example is a dragon. Ask some kid here in Europe to draw a dragon, and you’ll likely get a giant fire-breathing lizard with wings. Ask the same of some kid in Asia and you’ll likely get a water-wielding snake with long whiskers. So I’m not sure how to feel about that point yet. However, I do want to say we should at least apply the same rules to everyone, and if anyone gets favoured it should be small businesses (for example, to create a level playing field against monopolistic powers), not big ones. If those big corps want to use copyrighted work for training, they also don’t get to complain when it copies them. Don’t cry when it hits you as a big multinational instead of some small business.

I’m also not convinced “AI art” is bad per se. While I haven’t used AI to create “art” in the broadest sense myself (indeed, even this blog’s featured image isn’t AI-generated; it’s a few openly licensed pictures I edited together in Krita and then put text over), I don’t mind it much either. I remember people complaining that digital music wasn’t real art, and I kinda feel similar here. Sure, AI art might take less effort, but it also gives you less control and tends to give much more generic results. In some cases, it’s fine to be generic. In other cases, it’s not, and that’s where human-made art shines. Take music: for generic, meaningless things or simple humour-based stuff, AI-generated music is fine. But what about that artistic masterpiece? Those tend to come from artists who do such odd things with music that they get turned down for record deals everywhere, until that one label that does sign them hits the jackpot (and for every such story, there are many artists who really were just too odd to break through). These kinds of odd, new things are rare even from humans, and will be even rarer from machines. Furthermore, things that hold deep meaning will never be fully machine-made, because we humans will always feel some distance when we know an AI is involved (and really, while I don’t mind AI art, I am all for transparency).

AI costing jobs is also something I’m not too worried about. Unlike what a lot of companies like to think, most human jobs won’t be replaced that easily. We’re already seeing companies slowly realise this after things keep going wrong when they try to make AI replace humans. Rather, it’s a tool to be used. Some jobs might disappear (either fully, or in the sense that fewer people are needed to do them), but that happens with every new technology, and new jobs get created in their place.

All the wrong moments, for all the wrong things

As I said, I wanna get more into this one as it has quite a few sides. In this chapter I’m also gonna try to explain a few complex things in an easy way, as they are needed to understand why things don’t work that way (and I keep it simple because people who understand the complex explanation don’t really need mine). But I’m not the best at explaining, especially not complex things in a simple way, so if it turns into a lot of blabbering, my apologies.

But let’s start by saying this is an issue I’d largely blame on big tech and the big AI companies. AI, especially but not limited to LLMs, is getting thrown in your face everywhere. AI in your OS, your office software, your notepad, your email, your ticket systems, scrum boards, video calls. Name it and it gets AI. But most of the time, what these systems actually do is completely ignored. They get presented as knowledge machines and search engines. But they’re not. They don’t know shit. Well, they know one thing. In the case of an LLM, they know what kind of sentence usually follows the previous sentence. That’s it. They have no clue about the meaning of the words they generate, let alone whether they make any sense. But AI is also complex, and you cannot expect Average Joe to know exactly how it works. So when it gets presented to them for all these uses, and it seems to work most of the time, it’s hard to make them understand it’s not suited for that.
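To make the “it only knows what usually comes next” idea a bit more concrete, here is a deliberately tiny Python sketch. It is not how a real LLM works (those use neural networks over tokens and look at far more context than one word); it is a hypothetical word-pair (bigram) counter that remembers which word followed which in its training sentences and then always picks the most frequent follower. The function names and the three training sentences are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for every word, which words followed it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def continue_text(follows, prompt, max_words=5):
    """Greedily append the most frequent next word. No notion of meaning or truth."""
    words = prompt.split()
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # nothing ever followed this word in the training text
        words.append(options.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical toy training data.
training = [
    "the sun is hot",
    "the moon is cold",
    "the night is cold",
]
model = train_bigrams(training)
print(continue_text(model, "the sun"))  # the sun is cold
```

Because “is” was followed by “cold” twice and by “hot” only once, the sketch happily produces “the sun is cold”: a grammatically fine, confident-sounding sentence that is simply wrong. Nothing in it ever checks facts, because that was never its job.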

And let’s be clear, sometimes it can be handy to use a technology for something other than its intended use. AI systems are no exception. But it’s also important to realise when you’re doing this, and that there may be unwanted side effects. Those need to be brought into scope and taken into account at all times. And that doesn’t happen currently. Instead, we’re taught this is what it’s for. It kinda reminds me of VPNs, where people think they’re there to give them privacy, not realising what a VPN is and what it’s really for. And with that, people start to use them in ways that harm their privacy instead, routing all their internet usage through scammy servers that sell their data, because they heard about it from some influencer. And with AI, the issue is much more widespread and causing way more harm.

I mentioned before that these AI systems are based on how we think our human brains work, but there is a difference with humans. Not that we have creativity and personality while they don’t. In the end, crude as it sounds, we’re just a bunch of neural connections and chemical reactions too. But a big difference is that an ANN is built for one task only, while our brains have I-don’t-even-know-how-many of these networks for different tasks, which are also connected so they can work together. The best explanation I can give is that an ANN is like a sandboxed piece of brain. You cannot expect it to do all the tasks our brain does. And it’s important to know that, despite being only a piece of logic, it’s unpredictable (after all, computers are still just a bunch of switches going on or off, and even when quantum computing becomes a thing it’s still logic; the math just becomes more complex). There is no way to know with 100% certainty what output an ANN will give. Why? Well, there are a lot of complex things we could say about why this happens, but let’s keep it easy. Going by the easy “it works like how we think our brain works” explanation, just think of the fact that we don’t fully understand our brains either. Perhaps some day we will find a way, but we might just as well never figure it out.

It’s good to realise this, because then you also realise that “hallucinations” aren’t the software failing to do what it should. It does exactly what it should. Sticking with the LLM (though you can say similar things about other ANNs): it produces grammatically correct sentences that make sense as a follow-up to the previous one. Verifying the information inside those sentences was never part of its job. It just happens to be correct (or at least seemingly correct) often enough that it seems to work. And you might think reasoning models will improve this, since they have to explain their bullshit. But so far, some of them have been shown to give more “hallucinations” rather than fewer. Point is, they don’t know shit, only how to say whatever happens to come out in the most convincing way possible. In a sense, it’s like a master manipulator: talking about stuff he knows nothing about, while saying it in such a way that you believe he’s right and very knowledgeable.

Same can be said for bias. Every model will have bias, based on what it learned from. Simply put: if the training data contains more yeses than nos, it’s much more likely to say yes, because statistically, that’s what happened the most. You might think: but if something comes up the most, doesn’t that make it likely to be true? Well, not really. Humans are biased; each and every group and culture is biased. That’s OK. But each human, group and culture has a different bias. It’s impossible to make training data that contains an even amount of each possible bias, and as such the models will take over certain biases even if you try to make a bias-free system. Even worse, someone could deliberately choose which bias to train on the most, and so influence the model, which then influences all the users of that model by presenting it as fact more often. And we already see this happening. The Dutch tech site Tweakers did a nice little test to show how this can be abused. One of the writers wrote on some webpage optimised for scraping that he’d won some grand book prize, and since he’s not famous enough to have tons of sources saying otherwise, ChatGPT started repeating it as fact. But even topics with lots of information available to counter such tricks are at risk. We’ve already seen Big Tech companies use the algorithms in their services time and again to influence society in the worst ways, getting people addicted and swaying politics. And since most models are owned by them, or by big corporations that are all friendly with them, they could just as easily decide to put more training data into these models that supports their opinions or favours whatever they want people to think. They could even add total bullshit, and the model will tell it to you in the most convincing way. And like that, it becomes the perfect propaganda machine.
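The “more yeses than nos” intuition can be sketched in a few lines of Python too. Again, this is a hypothetical toy and not how any real model stores what it learned: it simply turns counts of answers in some made-up training data into probabilities. It also shows why a single uncontested claim, like in the Tweakers test, comes out as “certain”, and how whoever controls the data can tip the balance by adding more of it.

```python
from collections import Counter

def answer_distribution(sources):
    """Turn counts of answers seen in (toy) training data into probabilities."""
    counts = Counter(sources)
    total = sum(counts.values())
    return {answer: count / total for answer, count in counts.items()}

def most_likely(sources):
    """Pick whichever answer was seen most often: 'what happened the most'."""
    distribution = answer_distribution(sources)
    return max(distribution, key=distribution.get)

# Hypothetical training data: 7 sources say "yes", 3 say "no".
original = ["yes"] * 7 + ["no"] * 3
print(answer_distribution(original))  # {'yes': 0.7, 'no': 0.3}

# A single made-up claim with no competing sources looks "certain".
print(answer_distribution(["won the book prize"]))  # {'won the book prize': 1.0}

# Whoever controls the data can tip the balance by adding slanted examples.
flooded = original + ["no"] * 10
print(most_likely(original))  # yes
print(most_likely(flooded))   # no
```

Nothing in the counts says which answer is actually true; the toy only reflects what was fed into it most often.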

Sure, you can try to add extra layers on top to verify the output, trying to catch these “hallucinations” and biases. But that will never fully work, because no computer system has, and understands, all knowledge. You could maybe try to recreate all the networks in our brain, even combining them with a virtualised version of the chemical reactions. Then, in theory, you might be able to make a system that matches a human. It sounds like a cool vision, and it’s something I personally am very interested in. But we’re nowhere close to being able to do anything like that, no matter how much the big corporations like to pretend otherwise in their marketing. Despite the 50+ years of development behind the current AI systems, no such system is in sight. And as such, even with the exponential growth of AI, it’s not gonna be there any time soon. We, the people around now, might not even live to see it. It’s a futuristic dream. But even if it does get there, it’s still gonna make mistakes, even more than dedicated computer programs do. Because humans aren’t perfect, and are in fact rather inefficient. So when you copy our “design”, you’re also copying the flaws.

Anyway, all of this puts uses like knowledge machines, search engines, or even bringing digital characters to life in the same category: using a side effect to achieve something. It can work at times, and even be useful, but it’s extremely easy to make things way worse if you don’t fully understand it.

After explaining all this, I also wanna add an extra point regarding the whole privacy/security part. All of this also means you’re never gonna fully stop a model from spitting out sensitive information like personally identifiable information (PII for short) or business secrets. As soon as they are in there, they’re open for the world to see with some prompt engineering. Hence the only way to prevent them from leaking is to never have them in the training data to begin with (and to delete any model that has been trained on them). Outside of that, everything is risk management, where the risk exists with some likelihood that needs to be monitored. It might be decided that it’s an acceptable risk, but it’s leaked data nevertheless and should go through all the same procedures as any other data leak, including the rules on transparency and penalties. As far as I’m concerned, that means anyone wanting to train a model on PII needs to ask the data owner for permission in a transparent and clear way before training (so no, no “unless you opt out before X date we use it”, and no hiding it in the ToS of a whole different service like a social media platform). And consequences should be dire for failing to do so.
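Keeping PII out of the training data means filtering it before training, and keeping track of what the filter found. As a very rough, hypothetical Python sketch of that idea: the snippet below masks e-mail addresses and phone numbers with simple regular expressions and records which documents contained them, so that can be monitored. The patterns, names and example texts are assumptions made up for this example and are nowhere near complete; real PII (names, addresses, medical details) is much harder to catch, and a filter like this will both miss things and flag false positives.

```python
import re

# Hypothetical, deliberately simple patterns. Real PII detection needs far more
# (names, addresses, ID numbers, context) and will still miss things.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def scrub(text):
    """Replace anything matching a PII pattern with a placeholder tag."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

def curate(documents):
    """Scrub each document and record which ones contained PII, for monitoring."""
    cleaned, flagged = [], []
    for index, doc in enumerate(documents):
        result = scrub(doc)
        if result != doc:
            flagged.append(index)
        cleaned.append(result)
    return cleaned, flagged

# Hypothetical example documents.
docs = [
    "Mail me at jan.jansen@example.nl or call +31 6 12345678.",
    "Bikes outnumber people in the Netherlands.",
]
cleaned, flagged = curate(docs)
print(cleaned[0])  # Mail me at [EMAIL] or call [PHONE].
print(flagged)     # [0]
```

The list of flagged documents is the monitoring part: if a later check shows something slipped through anyway, that is the point where the affected model gets rolled back rather than hoping nobody asks the right question.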

The good

But the good thing is that all, or at least most, of these issues don’t have to be there. They are not inherent to the technology, but rather an effect of the way it’s used now. We could curate training data more and ensure no PII is in there that shouldn’t be. Make sure this is monitored, and dare to throw away the latest version if it accidentally does contain some, even if that means rolling back to an older version that wasn’t trained on it yet. To be fair, if such a policy were enforced, I suspect the much better monitoring that is now often dismissed as “impossible” would be built in no time. We could be transparent when something is AI. We could do proper research into the most effective technology and only choose AI when it actually adds enough to be worth the energy used, instead of using and marketing it everywhere and for everything, and we could normalise and teach proper usage, including when not to use it. All that takes is those big companies being willing to earn a bit less (not even a lot less, just a bit less) money. Or, since that’s unlikely to ever happen, we force them to with regulation that’s enforced. The biggest issue left is the psychological aspect of people forming relationships. Humans do that with everything. We already tend to personify our bikes (most people will have said things like “don’t fall!” to theirs when trying to park it, at least here in the Netherlands, where we have more bikes than people). And most of the time, that’s OK. But we need to go about it in a certain way, and be careful about it. I will leave the exact details to people who have studied it more, though. It’s a whole field of study in and of itself.

But when we solve that, AI can be great!

Healthcare and related uses

The technology has so many things it could improve. While we should be careful about what data we upload where, as we don’t want people’s medical data ending up at OpenAI, Musk, Microsoft, Meta, or whoever, there are still options, especially when we run models in our own medical centres, or in data centres dedicated to that purpose in our own jurisdiction. We already see some of these useful applications right now. For example, image recognition is used to find diseases in medical images like CT scans (the technology has also been applied successfully in other fields, like industrial automation). Of course, here too we need to be careful. We don’t want doctors to blindly follow whatever the computer says. But how great is it if we can find cancer earlier, increasing the chances of curing someone?

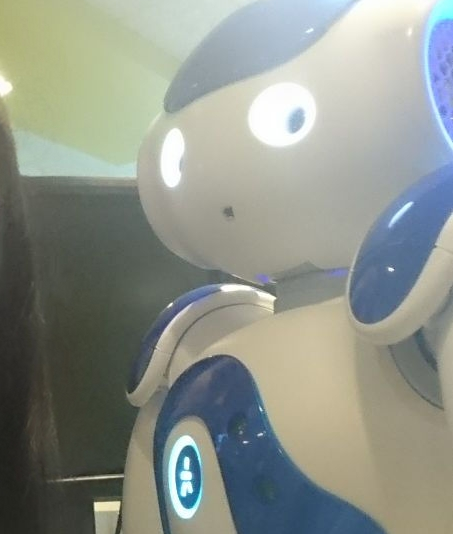

When I was in university, I also helped with what I think was a cool project. They wanted to use humanoid robots to help autistic children practice recognising social body-language cues in a safe, controlled environment. I programmed a few NAOs from SoftBank for this back then, while some people studying psychology and education worked on the actual plans for the interactions. I’ve still got a photo of one of the cute little guys. Initial testing seemed positive, and the kids seemed to quite enjoy it too.

On a more controversial note, I think it can also help with loneliness, which I will put under healthcare and related, as a lot of psychological issues can be caused by it. Sure, in a perfect world no one would be an outcast, and while I think it’s good to strive for everyone to have a place, I think it’s unrealistic to expect that to become reality, at least not any time soon. But I do admit it’s a finicky subject that easily edges over into unhealthy relationships.

Furthermore, since ANNs are based on how we think our brains work, they can be used to study our own psychology. I remember that back when I was watching those androids and getting into AI, there were places in Japan using it for exactly this. Simply put, even the best sociologist has bias, but letting an AI learn from the human behaviour it observes can give you more objective information (where the AI’s bias comes from the people it learned from). Of course, understanding what that AI took from its learning is a whole other kind of sport, and, as we touched on, currently impossible. But it’s an interesting approach. And there’s more to it: HRI can be used to study human behaviour as well. Also, “ANNs are based on how we think our brain works” isn’t a one-way road. The things we learn about ANNs can in certain cases be applied to the neurology of other living beings.

Outside of healthcare

Even the current things, like LLMs, can be used in a good way. I’m gonna focus on LLMs for this part, but similar things can be said about other types of ANNs and other things we might consider AI. The key is: don’t use it for something it’s not made for, like providing information, but use it for what it does well. LLMs are great at analysing language, so using one to help you rewrite your own sentences in a more readable way is useful, assuming you read the output and adjust where needed. It can also be used to practice conversational flows. It knows quite well what kind of reaction usually follows what; if anything, that’s the one thing it does know. And while practicing with humans is good, sometimes better, it’s not always an option for everyone. Not everyone has someone who can help with everything, and even when they do, they may feel too shy or ashamed to ask for help. Such practice can help not only with learning what to do, but also with building the confidence to do it with real people. LLMs can also be used to brainstorm with. You know, that moment when all ideas are welcome no matter how stupid, which you then filter later? These are all uses LLMs are good at, where hallucinations and such don’t cause many issues, as they get filtered out or stay inside clearly hypothetical practice environments. Even if we do take the environment into account, I don’t think every use is bad. Some gains might be so big they are worth the resources (and others, especially in this field, might not), and many tasks could be done with similar results by smaller, more optimised models (so technically not LLMs, but still language models), especially when combining them with other technologies.

AI that creates “art”, like image and music generators, can be used in nice ways as well. I remember that back in the day, Vocaloid (a singing voice synthesiser by Yamaha) had this nice description somewhere saying it wanted to give a voice to people who had no singing skills. I thought that was a nice way of looking at it. But using synthesising software still requires certain skills not everyone has. For all the people who worry about AI copying their art, I suspect there are also people with no artistic skills or not much talent, or people with no resources or who don’t think an idea is worth the resources, who do have good ideas (ideas that might even turn out to be worth the resources afterwards, but hindsight is easy).

And it’s also good for research. It has been researched for 50+ years without causing the big issues we see now, so there is no reason not to keep doing that. Because the technology is interesting, and could do much cooler things in the future that will make us laugh at how dumb and common ChatGPT is. Much like how we no longer consider those changing mouse pointer icons anything special, even though they once counted as AI.

Besides those, many more genuinely fitting applications exist, and no doubt more will be found. And even if all the “useful” reasons are put aside, there is still fun. AI can be fun, and if we can have that without causing problems, why not? Fun brings happiness, and happiness is good for everyone. I for one cannot wait for (what I consider) ethical AI-powered robot buddies 🤖!